Model HQ

Using OpenAI or Anthropic Models

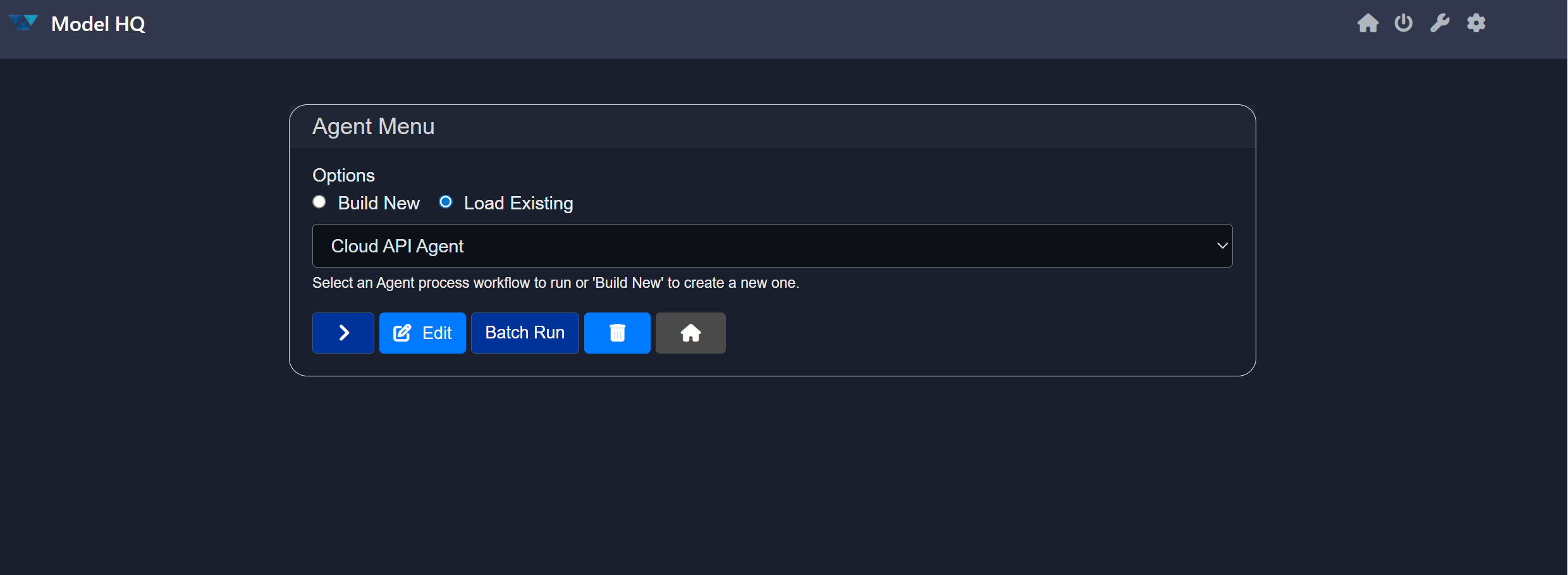

Frontier models from providers such as OpenAI or Anthropic can be used to build Agent workflows in Model HQ. A short example is available at:

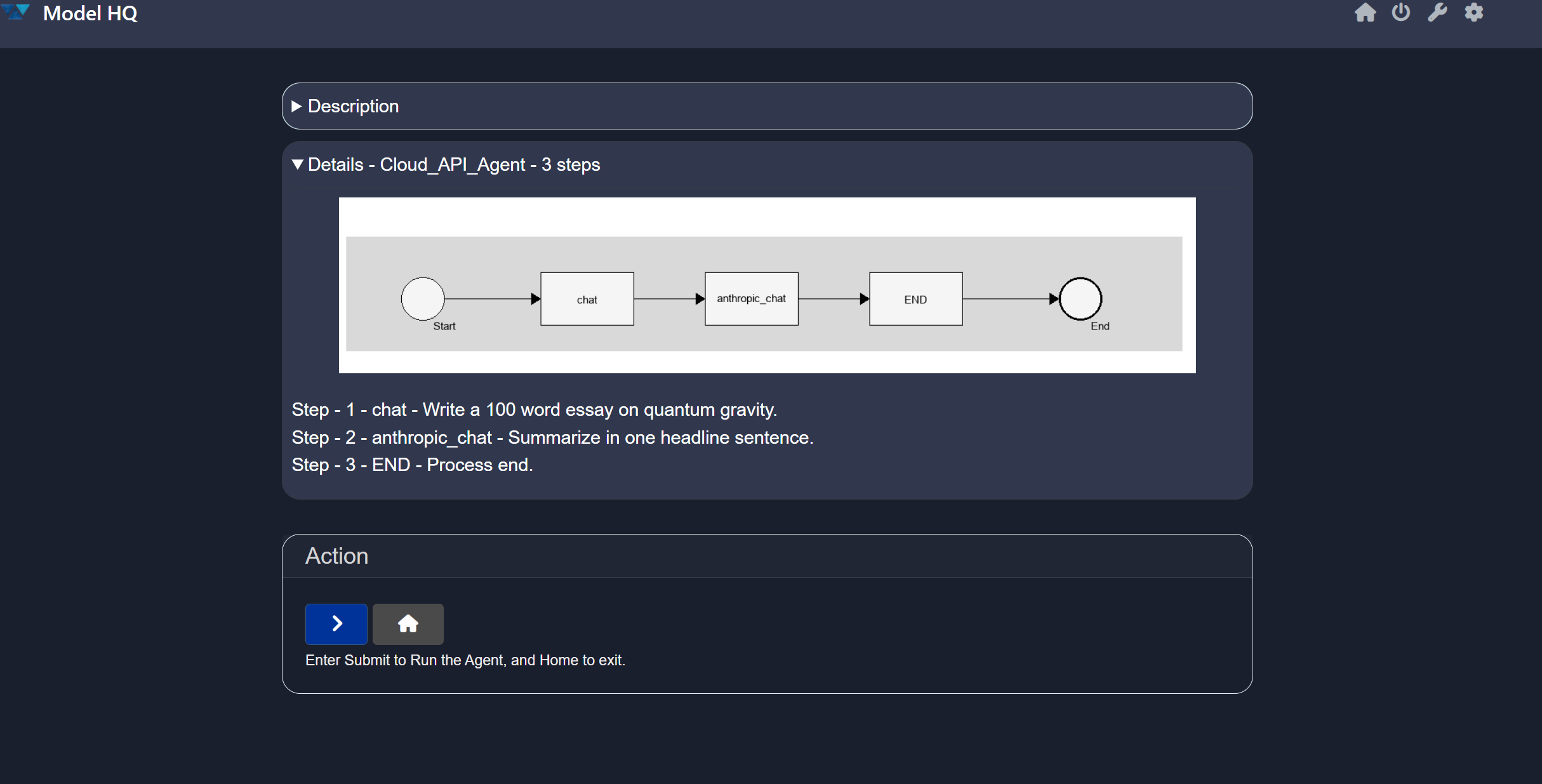

Agents > Cloud API Agent > Details

This allows quick testing to verify whether the API connection is successful.

Default Setup

The template Agent workflow is set to Anthropic by default.

To use this workflow successfully, you must enter your API key for the desired model in:

Configs (top right corner) > Credentials > Anthropic API key
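If the workflow fails, it can help to check the API key outside Model HQ. The following is a minimal sketch of such a check and does not show how Model HQ itself calls the providers. It builds, without sending, a small chat request against each provider's public HTTP endpoint. The function name build_request and the model argument are illustrative assumptions. Supply a model name that your account can access.

```python
import json
import urllib.request


def build_request(provider: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a minimal chat request for the given provider.

    Illustrative helper, not part of Model HQ.
    """
    messages = [{"role": "user", "content": prompt}]
    if provider == "anthropic":
        url = "https://api.anthropic.com/v1/messages"
        headers = {
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        }
        # The Anthropic Messages API requires max_tokens.
        body = {"model": model, "max_tokens": 32, "messages": messages}
    elif provider == "openai":
        url = "https://api.openai.com/v1/chat/completions"
        headers = {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        }
        body = {"model": model, "messages": messages}
    else:
        raise ValueError(f"unknown provider: {provider}")
    return urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )
```

Passing the returned request to urllib.request.urlopen sends it. A rejected key typically surfaces as an HTTPError with status 401.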

Switching to OpenAI

To change the Agent workflow test to OpenAI, follow these steps:

- Go to:

Agents > Cloud API Agent

- Click on:

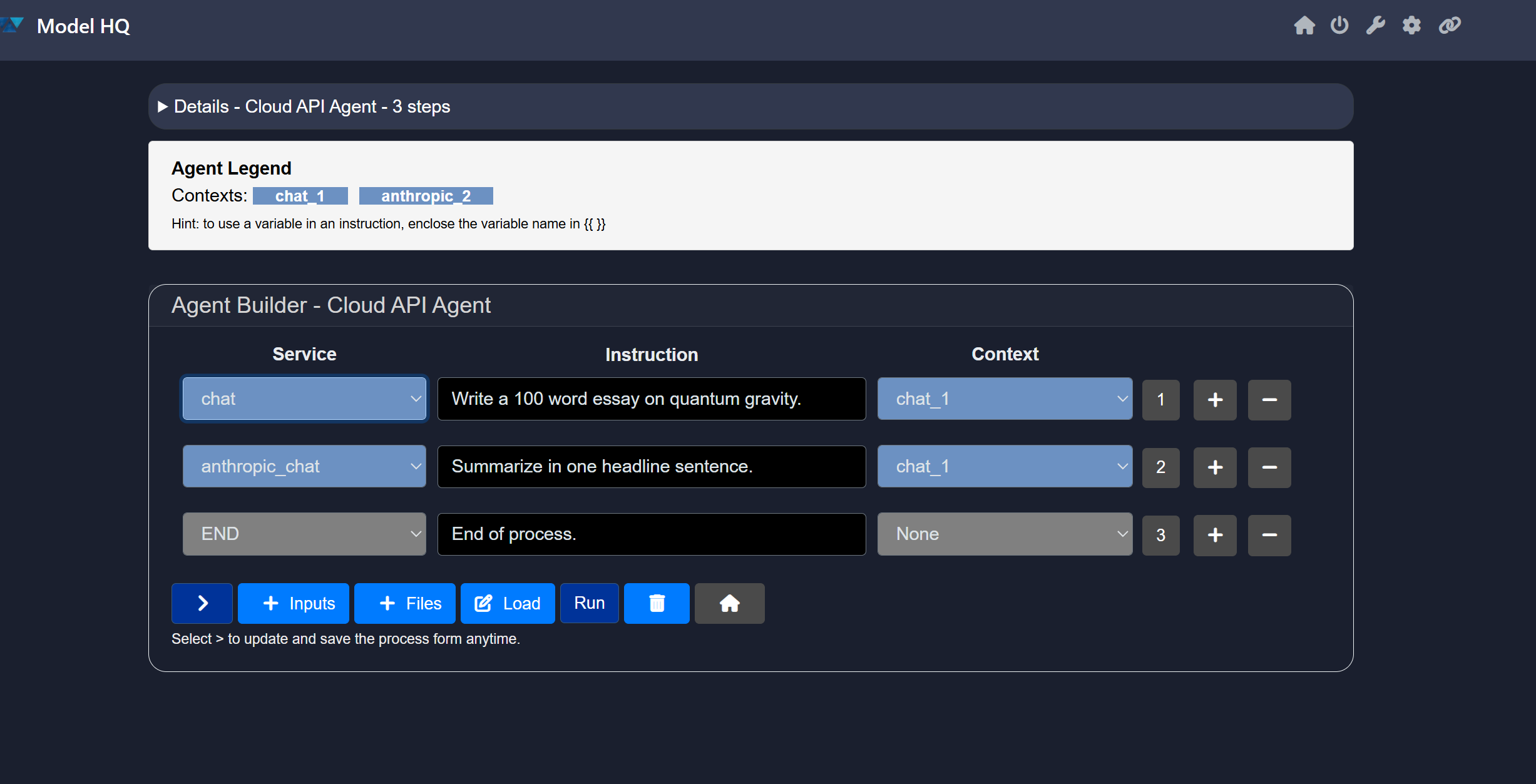

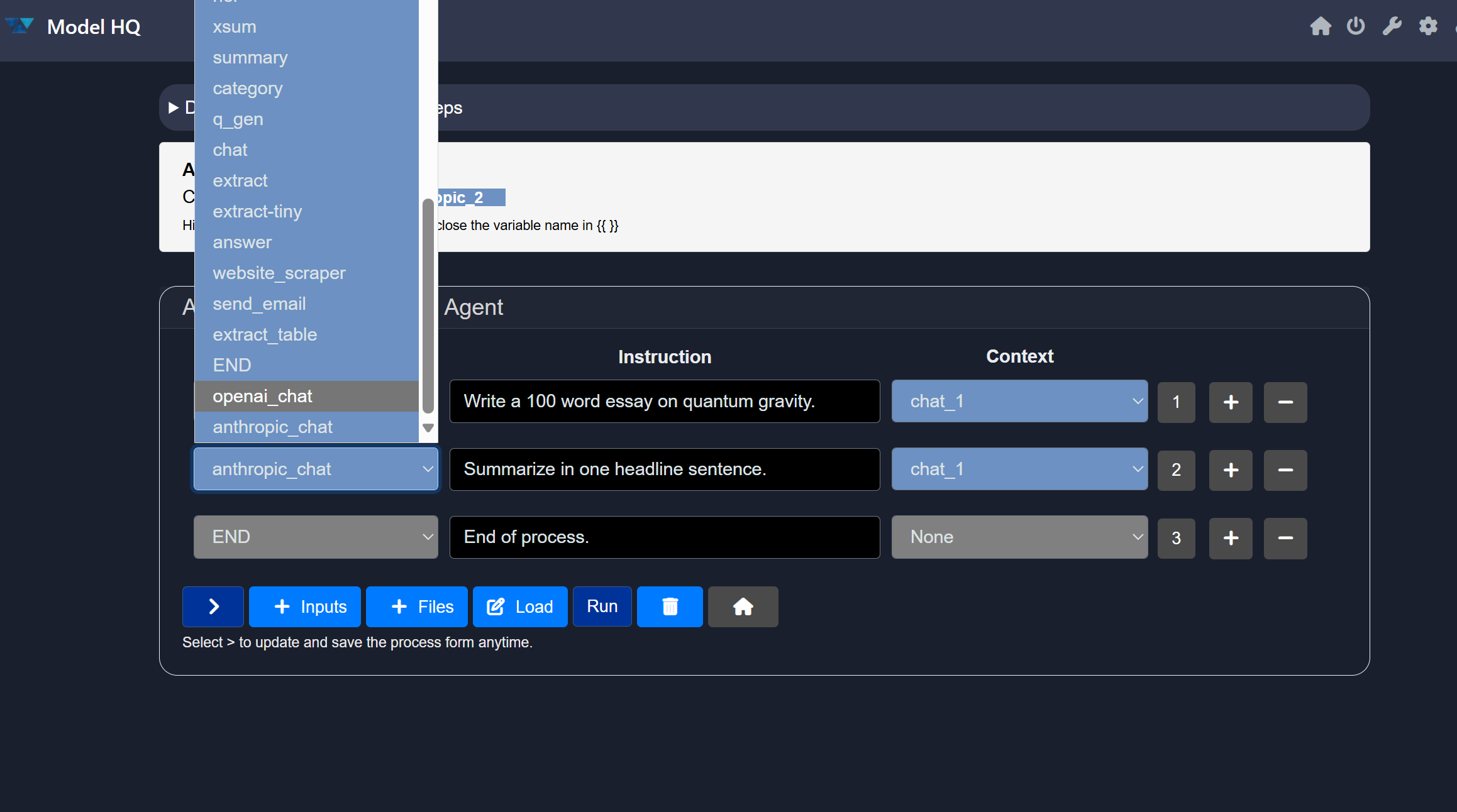

Edit > Process

- Locate the second row and change:

anthropic_chat → openai_chat

- Click > to proceed.

The first step of the process (chat) uses a default local model. It does not use an external model and is intended as a quick test to ensure your models are connected properly.

Make sure to set the Context of Chat_1 to None in the first row. (The context of the OpenAI row should remain as Chat_1.)

No context is needed for this model to provide a valid answer. The None option may become available only after clicking > following the model change in the Cloud API Agent workflow.
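The edits in this section can be pictured as a change to a small table of process rows. The sketch below is purely illustrative: Model HQ is configured through its interface, and the row layout with service and context keys is a hypothetical representation, not its actual storage format.

```python
def switch_provider(rows, old="anthropic_chat", new="openai_chat"):
    """Return a copy of the process rows with the cloud step swapped.

    Hypothetical representation: each row is a dict with 'service' and
    'context' keys. This is not Model HQ's real data model.
    """
    updated = []
    for row in rows:
        row = dict(row)  # copy so the input list is left untouched
        if row["service"] == old:
            # Second row: change the service, leave its context as it was.
            row["service"] = new
        elif row["service"] == "chat":
            # First row: the local chat step needs no context.
            row["context"] = "None"
        updated.append(row)
    return updated


process = [
    {"service": "chat", "context": "Chat_1"},
    {"service": "anthropic_chat", "context": "Chat_1"},
]
print(switch_provider(process))
```

After the call, the first row has context None and the second row uses openai_chat with its context still set to Chat_1, matching the steps described above.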

When you upload a CSV to the OpenAI API, the most common reason for errors is that the API doesn’t directly accept raw .csv files for all endpoints.

The API also does not process compressed archives (.zip, .tar, .rar, etc.). If you try to upload one, you’ll get an error.
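One way to avoid both problems is to check the file type up front and pass CSV content as plain text rather than as a raw file. The sketch below assumes that approach. The function name prepare_for_prompt and the suffix list are illustrative and not part of Model HQ or the OpenAI API.

```python
import csv
import io
from pathlib import Path

# Archive suffixes named in the text above; the list is not exhaustive.
ARCHIVE_SUFFIXES = {".zip", ".tar", ".rar"}


def prepare_for_prompt(filename: str, data: str) -> str:
    """Turn file content into plain text suitable for a chat prompt.

    Illustrative helper: rejects compressed archives and flattens CSV
    rows into pipe-separated lines. Other text is returned unchanged.
    """
    suffix = Path(filename).suffix.lower()
    if suffix in ARCHIVE_SUFFIXES:
        raise ValueError(f"compressed archive not supported: {filename}")
    if suffix == ".csv":
        rows = csv.reader(io.StringIO(data))
        return "\n".join(" | ".join(cell.strip() for cell in row) for row in rows)
    return data
```

For example, prepare_for_prompt("data.csv", "a,b\n1,2\n") yields two lines of text, a | b and 1 | 2, which can be placed directly in the message content.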

Final Steps

- Click Run > > to run the agent with OpenAI as the new LLM provider.
- You will see a completed Agent workflow similar to the responses below.

This confirms that you can now use either Anthropic or OpenAI in your Agent workflow.